(or, how I managed to make ray tracing a bit faster without having one of those fancy new GPUs…)

Crimild’s existing ray tracing solution (yes, that’s a thing) is still very basic, but it can render complex scenes with several primitive types (e.g. spheres, cubes, planes). The problem is that it’s too slow, mainly because it’s completely software-based and runs on the CPU. True, it does use multithreading to reduce render times, but it’s still too slow to produce nice images in minutes instead of hours.

Therefore, I wanted to take advantage of my current GPU(*) in order to gain some performance and decrease rendering times. Since I don’t own a graphics card with ray tracing support, the only option I had left was to use GPU Compute instead.

(*) My Late 2018 MacBook Pro has a Radeon Pro Vega 20 dedicated GPU, with only 4 GB of VRAM.

Requirements

What I needed was a solution that provides at least the following features:

- Physically correct rendering: Of course, the whole idea is to render photorealistic images.

- Short render times: Ray tracing is slow by definition, so the faster an image is rendered, the better.

- Iterative: Usually, I don’t need the final image to be completely rendered in order to understand if I like it. As a matter of fact, only a few ray bounces are required to know that. So, the final image should be composed over time. The more time passes, the better the image quality gets, of course.

- Interactive: This is probably the most important requirement. I need the app to stay interactive while the image is being rendered, not only so I can use menus/buttons, but also so I can reposition the camera or change the lighting conditions and then check the results of those decisions as fast as possible.

- Scalable: I want a solution that works on any relatively modern hardware. Like I said, my setup is limited.

The current solution (the CPU-based one) is already physically correct, iterative and quite scalable too, but it falls short on render times, and it is nowhere close to being interactive.

Initial attempts

My first attempts were very straightforward.

Using shaders, I started by casting one ray for each pixel in the image, making it bounce for a while and then accumulating the final color. It was, after all, the very same process I use in the CPU-based solution, but now tracing thousands of rays in parallel thanks to “The Power of the GPU”.
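To make the cost of this approach concrete, here is a minimal CPU-side sketch in Python (not the actual shader code). It assumes a scene made only of perfectly reflective spheres and a constant sky color; `SKY`, `hit_sphere`, `reflect` and `trace_pixel` are hypothetical names made up for the example. The point to notice is that the whole bounce loop runs to completion for a pixel in one go, so the work per pixel grows with the scene and the bounce limit.

```python
import math

SKY = (0.5, 0.7, 1.0)  # hypothetical constant background color


def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length direction, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-4 else None


def reflect(direction, normal):
    """Mirror the direction around the surface normal."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(direction, normal))


def trace_pixel(origin, direction, spheres, max_bounces=8):
    """Follow one camera ray until it escapes the scene or runs out of bounces."""
    throughput = (1.0, 1.0, 1.0)
    for _ in range(max_bounces):
        nearest = None
        # No acceleration structure: every sphere is tested for every bounce.
        for center, radius, albedo in spheres:
            t = hit_sphere(origin, direction, center, radius)
            if t is not None and (nearest is None or t < nearest[0]):
                nearest = (t, center, radius, albedo)
        if nearest is None:
            # The ray escaped: the sky lights whatever we bounced off so far.
            return tuple(a * s for a, s in zip(throughput, SKY))
        t, center, radius, albedo = nearest
        origin = tuple(o + t * d for o, d in zip(origin, direction))
        normal = tuple((p - c) / radius for p, c in zip(origin, center))
        direction = reflect(direction, normal)
        throughput = tuple(a * b for a, b in zip(throughput, albedo))
    return (0.0, 0.0, 0.0)  # bounce limit reached: treat the ray as absorbed
```

Each sphere is a `(center, radius, albedo)` tuple, so `trace_pixel((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), [((0.0, 0.0, -3.0), 1.0, (0.8, 0.2, 0.2))])` traces a single pixel looking straight at a red sphere.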

Render times did drop considerably with this approach, generating images in a matter of seconds instead of minutes. And it seemed like the correct solution. But it had one major flaw: it was not scalable and, most importantly, it wasn’t even stable.

For simple scenes, everything was OK. Yet, the more complex the scene became, the more computations the GPU had to do per pixel and per frame. That could stall the GPU completely, eventually crashing my computer (yes, Macs can crash).

So, a different approach was needed.

Think different

I took a few steps back, then.

I knew that the most important goal was not to produce images faster (keep reading), but to keep the simulation interactive, and stable too. That meant running it at 30 fps or more, which leaves a time budget of about 33 ms (1000 ms / 30) or less for a single frame.

In short, I needed to constrain the amount of work we do in each frame, even if that means the final image might take a bit longer to render. It will still be faster than the CPU-based approach, of course.

After some thought, I came up with a new approach: for each frame, only a single ray per thread is computed. Let me repeat that: compute one ray per frame, not one pixel. Obviously, producing the final color for a single pixel will require multiple frames but that’s fine.

How does it work?

- Given a pixel, we cast a ray from camera to that pixel.

- We compute intersections for the current ray.

- If the ray hits an object, the material color is accumulated and a new ray is spawned based on reflection/refraction calculations. The new ray is saved in a bag of rays for a future iteration.

- The next frame, we get one ray from the bag and compute the intersections for it, spawning another ray if needed.

- If there are no more rays in the bag for that pixel, then we can accumulate the final color and update the image.

- And then we start over for that pixel.

And that’s it.

Notice that we grab any ray from the bag. I don’t care about the order in which the rays were created. Eventually, we’ll process all of them, so there’s really no need to get them in order. Even if more rays are generated, the final image will converge.
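The steps above can be sketched as a small per-pixel state machine. This is an illustrative Python version, not the real compute shader: `PixelState`, `step_pixel` and the `shade` callback are hypothetical names. I'm assuming `shade(ray)` returns the color contributed by that ray plus a list of newly spawned rays, and that each ray carries its own weight so the processing order doesn't matter.

```python
from dataclasses import dataclass, field

BLACK = (0.0, 0.0, 0.0)


def add(a, b):
    """Component-wise sum of two RGB tuples."""
    return tuple(x + y for x, y in zip(a, b))


@dataclass
class PixelState:
    bag: list = field(default_factory=list)  # pending rays, in no particular order
    current: tuple = BLACK                   # color gathered by the sample in flight
    total: tuple = BLACK                     # sum of all finished samples
    samples: int = 0                         # number of finished samples

    def color(self):
        """Average of the finished samples (black until the first one completes)."""
        if self.samples == 0:
            return BLACK
        return tuple(c / self.samples for c in self.total)


def step_pixel(state, camera_ray, shade):
    """Process exactly one ray for this pixel: the work of one thread in one frame."""
    if not state.bag:
        state.bag.append(camera_ray)  # empty bag: start over with a fresh camera ray

    ray = state.bag.pop()  # any ray will do; taking the last one is just convenient
    contribution, spawned = shade(ray)
    state.current = add(state.current, contribution)
    state.bag.extend(spawned)  # saved for future frames

    if not state.bag:
        # No rays left for this sample: fold it into the image.
        state.total = add(state.total, state.current)
        state.samples += 1
        state.current = BLACK
```

Calling `step_pixel` once per frame keeps the per-frame cost fixed at one intersection query per thread, no matter how many bounces a sample ends up needing. The displayed image is simply `state.color()` for every pixel, which is why it starts out rough and improves over time.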

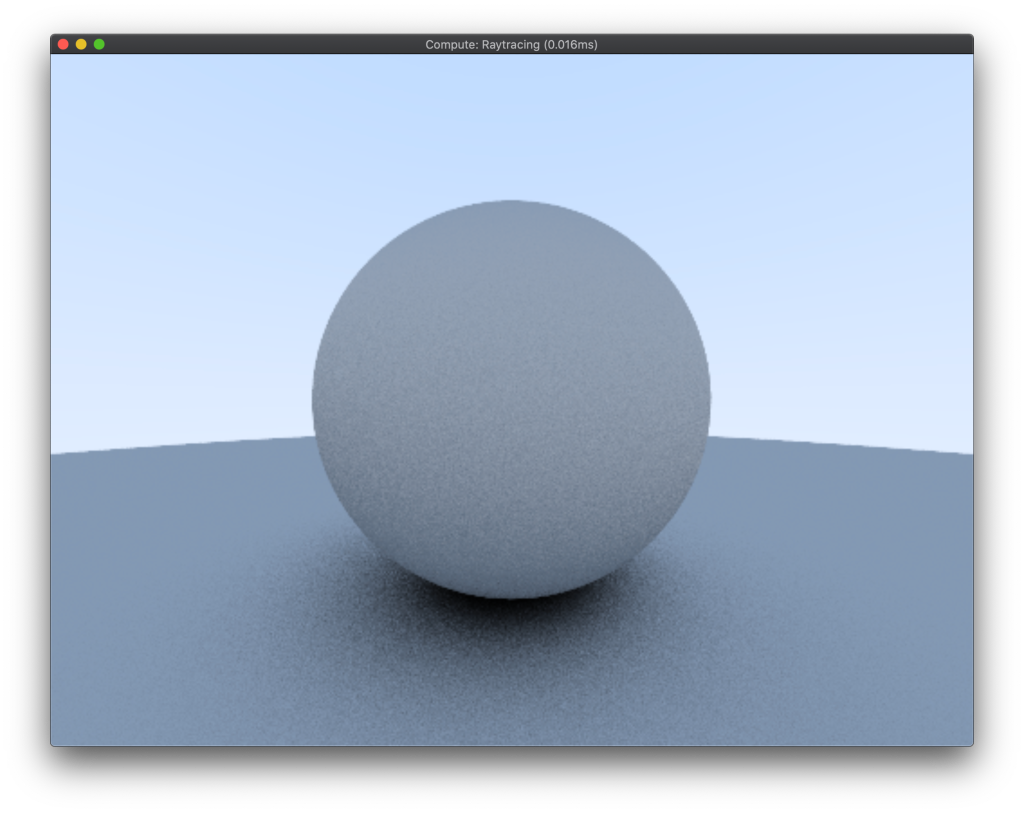

Results

This is indeed a very scalable solution, since we can choose how many threads to execute on the GPU each frame: 1, 30, 500, 1000 or even one per pixel in the image if the scene is not too complex or we have a powerful setup.

Of course, the fewer threads we execute, the more time the image will take to complete. But it still takes less than a minute to provide a “good enough” result on my current computer.
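One way to picture that trade-off is a simple round-robin assignment of threads to pixels. The sketch below is only an assumption about how such a schedule could look (the post doesn't depend on this exact scheme), and `pixels_for_frame` and `frames_per_pass` are hypothetical helpers.

```python
import math


def pixels_for_frame(frame_index, pixel_count, threads_per_frame):
    """Round-robin: the pixel indices handled by this frame's threads."""
    threads = min(threads_per_frame, pixel_count)
    start = (frame_index * threads) % pixel_count
    return [(start + i) % pixel_count for i in range(threads)]


def frames_per_pass(pixel_count, threads_per_frame):
    """Frames needed before every pixel has processed at least one ray."""
    threads = min(threads_per_frame, pixel_count)
    return math.ceil(pixel_count / threads)
```

For a hypothetical 640x480 image and 1000 threads per frame, `frames_per_pass(640 * 480, 1000)` is 308 frames, or roughly ten seconds at 30 fps, before every pixel has processed even one ray. With one thread per pixel it drops to a single frame, at the cost of doing much more work in each frame.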

Next steps

There’s always more work to do, of course.

Always.

Beyond adding new features to the ray tracer (like new shapes or materials), there is still room for optimization. For example, I’m not using any acceleration structure (e.g. bounding volume hierarchies) like the CPU-based approach does. Once I do that, performance will be even better.
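For context, the building block of a bounding volume hierarchy is a cheap ray/box test that lets us skip every primitive inside a box the ray never touches. Below is a minimal sketch of the standard slab test for an axis-aligned box. It is not taken from Crimild's CPU implementation, and `hit_aabb` is a hypothetical name.

```python
import math


def hit_aabb(origin, direction, box_min, box_max):
    """Slab test: True if origin + t * direction crosses the box for some t >= 0."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval in which the ray is inside all slabs seen so far.
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True
```

A hierarchy would run this test on a node's box first and only descend to its children (and eventually to the actual spheres, cubes or planes) when it returns `True`.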

And there is the problem of the noise in the final image, but I don’t really have a good solution for that yet.

See you next time!