Oh, look, another post about Metal.

Don’t worry, I promise this will be the last one, at least for a while.

In this final post in the Metal series, I’ll summarize what we’ve achieved so far, the good and bad decisions, and what I expect to improve in the future.

Let’s finish this…

What went right?

Working on an actual project instead of a demo. The first Metal demo was lacking a lot of features and was more of a test drive that helped me understand how the API worked. On the other hand, Le Voyage was already published by the time I started porting it to Metal, so whatever I ended up implementing had to support a minimal set of functionalities if I wanted it to be part of the game. In addition, it helped me focus on specific features rather than go with a more generic implementation. For example, there was no need for skeletal animation, so I skipped that feature completely.

Metal’s benefits showed up from the very beginning. Metal started to show its strength as soon as I completed the most basic implementation. The frame rate went up by at least 50% and the game felt much more fluid, even with all effects turned on and running at Retina resolutions. And this was before any optimizations were even considered.

Crimild’s design was up to the task and did not require any major modifications. Honestly, this was a nice surprise. When I started working with Metal, one of my biggest concerns was what changes would be required in Crimild (especially in the core-level libraries) in order to support the new paradigm. Those changes, in turn, might have had side effects on other implementations, which are [supposed to be] much more stable. But it turned out that it was possible to encapsulate all the Metal code (hacks included) within the Renderer itself. No major design changes were required and I was really happy about that.

Switching between OpenGL and Metal on iOS is done with a single flag. This was critical in order to understand how well the new renderer performed. The CrimildViewController class has a single flag that defines whether or not to use Metal. Most importantly, it will fall back to OpenGL automatically on those platforms that don’t support Metal.
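
As a rough illustration of the flag-plus-fallback idea, here is a minimal C++ sketch. The names (`Backend`, `DeviceCaps`, `selectBackend`, `backendName`) are hypothetical and are not taken from CrimildViewController; the real check for Metal support happens in platform code that is not shown here.

```cpp
#include <cassert>
#include <string>

// Hypothetical names throughout; this is not Crimild's actual API.
enum class Backend { OpenGL, Metal };

// Assumed to be filled in by platform code that queries the device.
struct DeviceCaps {
    bool supportsMetal;
};

// Picks Metal only when it is both requested and available.
// Every other combination falls back to OpenGL.
Backend selectBackend(bool preferMetal, const DeviceCaps &caps)
{
    if (preferMetal && caps.supportsMetal) {
        return Backend::Metal;
    }
    return Backend::OpenGL;
}

// Human-readable name, handy for logging which renderer is active.
std::string backendName(Backend backend)
{
    switch (backend) {
        case Backend::Metal: return "Metal";
        case Backend::OpenGL: return "OpenGL";
    }
    assert(false && "unknown backend");
    return "";
}
```

With this shape, asking for Metal on an unsupported device simply yields `Backend::OpenGL`, so the caller never needs a separate code path for older hardware.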

Metal shaders are a win. I love the way Metal works with shaders and since they are precompiled along with the rest of the application, there’s no need for extra error handling at run-time. And the errors are really easy to understand.

GLSL translated fairly straightforwardly to Metal’s shading language. The language itself is a bit different, since MSL is based on C++11 instead of ANSI C, yet the logic behind the shaders is pretty much the same. And the MSL Standard Library provides many of the tools that are available in GLSL too. I’m still not sure how to achieve a level of abstraction for writing shaders regardless of the platform, though (as I’m doing with GLSL).

Image effects are easier to work with on Metal. Handling FBOs turned out to be much easier than in OpenGL. The API is a lot less verbose and error checking is much more straightforward. This led to a simpler way to achieve post-processing effects. And, as I mentioned in a previous post, I still need to use one of Metal’s features that lets you skip a vertex or fragment shader function when it isn’t needed, which should make things even easier.

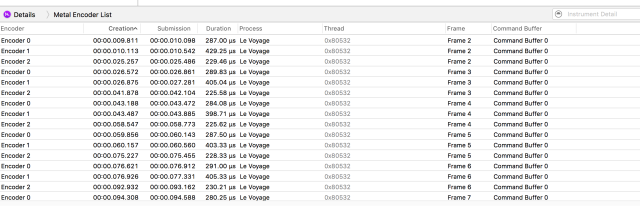

The tools. Both Xcode and Instruments were upgraded with a set of tools that made my life a lot easier when profiling and optimizing Le Voyage. The Frame Navigator and Shader Profiler were particularly useful for finding those bits of code that were causing the most performance problems.

It took me more time to write these posts than to actually implement the whole thing. I’m not a fast writer, that’s true. Still, implementing the entire rendering solution based on Metal was a lot easier than I originally estimated. The API is really well documented and the example code provided by Apple is clear and easy to follow.

What went wrong?

Metal only works on selected platforms. This is my main concern with Metal, since it only works on selected Apple devices. From what I’ve seen so far, working with Metal is pretty similar to working with Vulkan, but we still need to maintain both APIs. And OpenGL too, of course.

The Metal API requires us to do more things than OpenGL. While it’s true that some functionalities were easier to implement in Metal, most of the time the API was more verbose than its counterpart in OpenGL. But this was expected, since Metal is supposed to be a lower-level API. On the plus side, the mechanisms that required more code were usually invoked only once at the beginning or at very low rates.

FBOs are required for all render passes. The Metal Renderer assumes at least a forward-like render pass, with support for offscreen frame buffers by default. This leaves out Crimild’s basic render pass that is used for rendering scenes that don’t require too much graphic complexity.

Translation between Metal and Crimild objects was not always straightforward. I said before that there was no need for major design changes in Crimild. That was one of the design goals from the beginning. But in order to keep this statement true, I ended up doing some weird conversions and hacks, like assigning render pipelines to shader programs instead of materials.
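
To make that last hack a bit more concrete, here is a minimal C++ sketch of what "one render pipeline per shader program" could look like. Everything in it is an assumption made for illustration: `ShaderProgram`, `PipelineState` and `PipelineCache` are hypothetical stand-ins, not Crimild or Metal types, and building the pipeline is reduced to filling in a label.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-ins; not Crimild's or Metal's real types.
struct ShaderProgram {
    std::string vertexFunction;
    std::string fragmentFunction;
};

// Placeholder for a backend pipeline object (expensive to build in practice).
struct PipelineState {
    std::string label;
};

// Keeps one pipeline per shader program, building it on first use only.
class PipelineCache {
public:
    std::shared_ptr<PipelineState> get(const ShaderProgram *program)
    {
        assert(program != nullptr);

        auto it = _pipelines.find(program);
        if (it != _pipelines.end()) {
            return it->second; // already built, reuse it
        }

        // Assumption: a real renderer would create the backend pipeline here.
        auto pipeline = std::make_shared<PipelineState>();
        pipeline->label = program->vertexFunction + "+" + program->fragmentFunction;
        ++_buildCount;

        _pipelines[program] = pipeline;
        return pipeline;
    }

    // Number of pipelines actually built so far.
    int buildCount() const { return _buildCount; }

private:
    std::map<const ShaderProgram *, std::shared_ptr<PipelineState>> _pipelines;
    int _buildCount = 0;
};
```

Keying the cache by program means that two materials sharing the same shader program also share the same pipeline, which is convenient but is also why any per-material pipeline setting would not fit naturally into this arrangement.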

Uniform buffers could have been handled in a better way. I said it before and I’m saying it again: I really don’t like the way I ended up implementing uniform buffers in Crimild. It was probably due to a lack of experience on my side, but this is definitely a point of improvement for future versions.

No C API for Metal. The Metal API is completely written in Objective-C, which led to some weird code and argument passing in the final implementation. It was never really a problem since I’ve worked with mixed code before, but the code looks funny. The object-oriented API design is a plus, though.

What’s next?

I assume there are going to be several improvements to Crimild’s Metal support in the near future as I start using it for other projects. Again, I don’t like the way uniforms are handled, so that’s going to be my first focal point for sure.

In addition, I’m planning on supporting deferred rendering in Metal and improving the lighting model to support PBR at some point, too.

Finally, at WWDC 2016 last week Apple announced some new features for Metal that I would love to include in future versions. For example, Metal now supports dynamic polygon tessellation. In concept, it seems similar to OpenGL’s geometry shaders and I know for a fact that in order to support such a feature I need to change the shader design in Crimild. But that’s on my wish list too.

Final words

Well, that’s it. I’m done.

I’ve never written this much about anything before, so this was a clear achievement for me, beyond Metal itself. I can’t promise that I will keep up this pace for future Crimild features, though. So don’t get too excited.

As usual, feel free to look at the code on GitHub and provide comments. Crimild’s Metal support is still experimental (look for the devel branch), but it will be included in the next release anyway. And if you want to see it in action, go and download Le Voyage from the App Store if you haven’t played it already.

All in all, it has been a great journey. I was hyped by Metal from the beginning but I wasn’t expecting such amazing results considering the small amount of time that I invested.

Now that I know the power of Metal, I can’t wait to get my hands on Vulkan…