I knew from the start that supporting PBR (physically based rendering) was going to be one of the biggest (and hardest) milestones of the rendering system refactor. The fact that I’m finally starting to work on it (and also writing about it) indicates that I’m seeing the finish line after all this time.

There’s still a lot of work ahead, though.

Supporting PBR is not an easy task in any engine, since it involves not only creating new shaders and materials from scratch, but also performing several render passes before and after the actual scene is rendered in order to account for all of the lighting calculations. Some of those passes make things a bit faster in later stages, like pre-computing irradiance maps, while others improve the final render quality, like tone mapping.

The road is long, so I’m going to split the process into several posts. In this one, I’ll focus on HDR and Bloom.

HDR

Adding support for HDR was the natural first step towards PBR, since it’s extremely important in order to handle light intensities, which can easily go way above 1.0. Wait! How come light intensities can have values beyond 1 if that is the biggest value for an RGB component? Think about the intensity of the Sun compared with a common light bulb. Both are light sources, but the Sun is a lot brighter than the light bulb. If the frame buffer can only hold values up to 1, then after calculating lighting with a method such as Lambert or Phong, both of them end up clamped to the maximum possible value (that is, of course, 1 or white), which is definitely not physically correct.

HDR stands for “High Dynamic Range” and it basically means we are going to use 16- or 32-bit floating-point values for each color component (RGBA) in our frame buffer. That allows for brighter colors, because the values are no longer clamped to the [0, 1] interval, as well as more defined dark areas because of the improved precision.
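To make the difference concrete, here’s a minimal sketch in Python (chosen just for illustration; this is not the engine’s code). The `store_ldr` and `store_hdr` helpers and the intensity values are hypothetical, and Python floats have more precision than a real 16-bit frame buffer, so take it only as a rough model of clamped 8-bit storage versus unclamped floating-point storage.

```python
def store_ldr(value):
    """Model an 8-bit LDR buffer: clamp to [0, 1], then quantize to 256 levels."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255) / 255


def store_hdr(value):
    """Model a floating-point HDR buffer: the value is kept as-is, no clamping."""
    return float(value)


# Hypothetical intensities, picked only for this example
bulb = 2.0
sun = 250.0
shadow = 0.001

# In LDR both lights collapse to 1.0 and the faint shadow detail becomes 0.0
ldr_bulb, ldr_sun, ldr_shadow = store_ldr(bulb), store_ldr(sun), store_ldr(shadow)

# In HDR the three values stay distinct
hdr_bulb, hdr_sun, hdr_shadow = store_hdr(bulb), store_hdr(sun), store_hdr(shadow)

print("LDR:", ldr_bulb, ldr_sun, ldr_shadow)
print("HDR:", hdr_bulb, hdr_sun, hdr_shadow)
```

With the clamped buffer there’s no way to tell the bulb from the Sun anymore, while the floating-point buffer keeps both the very bright and the very dark values around for later passes.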

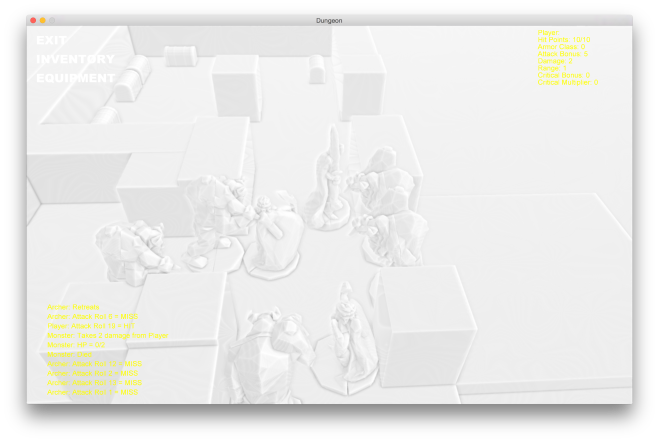

As an example, this is what a scene looks like without HDR when it contains lights with values above 1.0:

The above scene consists of a long box with a few lights along the way. At the very end we have one more light with a huge intensity value (around 250 for each component). What’s important to notice in this image is that we’re almost blinded by the brightest light and there’s not much else visible. In fact, we can barely see the walls of the box.

Now, here’s what the same scene looks like with HDR enabled:

The light at the end is still the brightest one, but now we can see more details in the darker areas, which makes more sense since our eyes end up adjusting to the dark in the real world.

Tone Mapping

At this point you might be wondering how those huge intensity values translate to something that our monitors can display, since most of them still work with 8-bit RGB values.

Once we compute all of the lighting in HDR (that might include post-processing too), we need to remap the final colors to whatever our monitor supports. The process is called tone mapping, and it involves a normalization process which maps HDR colors to LDR (low dynamic range) colors, applying some extra color balance in the process to make sure the result looks good.

There are many ways to implement tone mapping depending on the final effect we want to achieve. The one I’m using is the Reinhard tone mapping algorithm, which balances all bright lights evenly and applies gamma correction.
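As a rough sketch of the idea, here’s the basic form of the Reinhard operator, `c / (1 + c)`, followed by gamma correction, written in Python for illustration only. The function names are hypothetical, the gamma value of 2.2 is a common assumption rather than something taken from the engine, and the actual shader may use a different variant of the operator.

```python
def reinhard(color):
    """Basic Reinhard operator: maps each non-negative HDR component into [0, 1)."""
    return tuple(c / (1.0 + c) for c in color)


def gamma_correct(color, gamma=2.2):
    """Encode a linear color for display (gamma=2.2 is an assumed, common value)."""
    return tuple(c ** (1.0 / gamma) for c in color)


def tone_map(hdr_color):
    """Tone map first, then gamma correct. Expects non-negative components."""
    return gamma_correct(reinhard(hdr_color))


def to_8bit(color):
    """Quantize a [0, 1] color to the 8-bit values a typical monitor expects."""
    return tuple(round(c * 255) for c in color)


# Hypothetical HDR colors: the very bright light from the scene and a dim wall
bright = tone_map((250.0, 250.0, 250.0))
dim = tone_map((0.5, 0.5, 0.5))

print(to_8bit(bright), to_8bit(dim))
```

No matter how large the input is, the result always stays below 1, and brighter inputs still end up brighter than dimmer ones, which is what lets the darker areas remain visible next to a light with an intensity of 250.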

Bloom

While bloom is not really a requirement for PBR, at this point it was really easy to implement and probably the best way to test HDR colors.

Bloom is an image effect that produces those nice auras around bright colors, as if they were really emitting light.

Below, you can see the same scene with and without bloom:

The technique requires us to filter colors in the image using a threshold value. If we’re using HDR, it’s as simple as keeping the colors that are greater than or equal to 1.0. Then, we blur the filtered image as many times as the desired effect calls for (the more blur we apply, the bigger the final aura around objects). Finally, we blend the original scene image and the blurred one together. That’s it.
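The three steps above can be sketched on a single row of brightness values. This is a simplified Python illustration, not the engine’s implementation: the function names are hypothetical, the blur is a plain 3-tap box filter (a real implementation would more likely use a Gaussian blur on a 2D image), and the blend is assumed to be additive.

```python
def bright_pass(pixels, threshold=1.0):
    """Keep only the values at or above the threshold; everything else becomes 0."""
    return [p if p >= threshold else 0.0 for p in pixels]


def blur(pixels):
    """One pass of a 3-tap box blur; edge pixels reuse their own value as the missing neighbor."""
    n = len(pixels)
    return [
        (pixels[max(i - 1, 0)] + pixels[i] + pixels[min(i + 1, n - 1)]) / 3.0
        for i in range(n)
    ]


def bloom(pixels, passes=2, threshold=1.0):
    """Filter bright values, blur them `passes` times, then add the glow to the scene."""
    glow = bright_pass(pixels, threshold)
    for _ in range(passes):
        glow = blur(glow)
    return [p + g for p, g in zip(pixels, glow)]


# Hypothetical row of HDR brightness values with one very bright pixel in the middle
scene = [0.1, 0.1, 0.1, 4.0, 0.1, 0.1, 0.1]

narrow = bloom(scene, passes=1)  # the aura reaches one pixel to each side
wide = bloom(scene, passes=2)    # the aura reaches two pixels to each side

print(narrow)
print(wide)
```

Each extra blur pass spreads the glow one pixel further in this sketch, which matches the idea that more blur gives a bigger aura. The output is still in HDR, so it would go through tone mapping afterwards.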

Next up…

In the next post I’m going to talk about the new PBR materials and shaders and how they approximate the rendering equation in real time.